In imagining a universe filled with an invisible substance, it is natural to use air as an analogy. We immediately run into trouble, however, with Newton’s first law of motion, which is also an assumption in Einstein’s theories:

Every object in a state of uniform motion tends to remain in that state unless acted upon by an external force.

We know that air actively slows the movement of objects passing through it. Why aren’t moving objects slowed as they pass through Dark Energy?

One way around the problem is to assert that Dark Energy is a wallflower: it doesn’t interact with anything else. That’s a prevalent assumption, and it reminds me of the early history of thermodynamics. In building a theory of heat, early investigators noticed that heat moved from place to place without changing the substance it occupied, and so they conceived of caloric, an invisible fluid that permeated the spaces between atoms. That idea didn’t have much explanatory power, and it was eventually replaced by theories that explained heat as the disordered motion of atoms.

Astrophysicists tell us that the universe is a pretty cold place: only a few degrees above absolute zero, the coldest temperature possible. Studies of systems at these temperatures have revealed some amazing behaviors. For purposes of our discussion, liquid helium is an interesting example because it exhibits superfluidity, which allows objects to move through it without resistance. Superconductivity, in which materials carry electricity without resistance, is another consequence of the basic principles that govern the behavior of very cold systems. Both liquid helium and superconducting magnets, by the way, are extremely important technologies at facilities such as CERN.

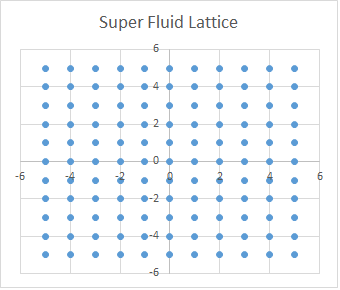

Liquid helium is particularly simple because its atoms bond to each other only very weakly, which is why it remains liquid at temperatures that cause almost every other element to freeze. For illustration, I’m going to use a model system of atoms arranged in a square two-dimensional lattice. The details may not apply to liquid helium, but I have reason to believe that they might apply to dark energy.

Imagine that we have a tank filled with liquid helium. At very cold temperatures, the atoms stack uniformly in the tank.

Such arrangements are said to have high order. They are typical of crystalline materials, including many solids. One of the upshots is that it’s difficult to move a single atom without moving the entire collection. That’s because gravity presses the volume into a compact mass, which means that the atoms are compressed slightly and therefore repel each other. So moving one helium atom pushes away the atom it’s moving toward. The cold is important here: if the lattice were vibrating somewhat, there would be small gaps that could absorb some of the distortion, and so parts of the lattice could change independently. It’s the lack of such vibrations that forces the lattice as a whole to respond to changes.
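To make the "whole collection responds" claim concrete, here is a minimal sketch under stated assumptions: a one-dimensional chain of identical atoms joined by identical nearest-neighbour harmonic springs (a stand-in for the mutual repulsion of compressed atoms), with the end atoms attached to fixed walls and no thermal vibration. One atom is held displaced and every other atom relaxes until the spring forces on it balance. The function `relax_chain` and the spring model are hypothetical illustrations, not the author's model.

```python
def relax_chain(n_atoms, pushed, push, tol=1e-12, max_sweeps=20000):
    """Equilibrium displacements of a 1D harmonic chain between fixed walls.

    Hypothetical helper: atom `pushed` (0-based) is held displaced by `push`;
    every other atom relaxes until the net spring force on it is zero, which
    for identical springs means it sits midway between its two neighbours.
    The walls (virtual atoms at -1 and n_atoms) never move.
    """
    u = [0.0] * n_atoms
    u[pushed] = push
    for _ in range(max_sweeps):  # Gauss-Seidel relaxation
        largest_change = 0.0
        for i in range(n_atoms):
            if i == pushed:
                continue  # this atom is held in place by the external push
            left = u[i - 1] if i > 0 else 0.0             # left wall is fixed
            right = u[i + 1] if i < n_atoms - 1 else 0.0  # right wall is fixed
            new = 0.5 * (left + right)
            largest_change = max(largest_change, abs(new - u[i]))
            u[i] = new
        if largest_change < tol:
            break
    return u


if __name__ == "__main__":
    # Push the middle atom of a 9-atom chain and look at every displacement.
    print(relax_chain(9, 4, 0.1))
```

In this sketch every atom in the chain shifts, with the displacement falling off linearly toward the walls. The gaps that thermal vibration would open are deliberately left out, which is exactly the cold-lattice condition the paragraph describes.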

Now let’s imagine that we place an impurity into the lattice.

This time a slight distortion of the arrangement will occur: the atoms nearest the impurity will shift their positions slightly. Since the atoms at the walls of the container can’t move, they will stay in place, so the distortion will be localized. What’s interesting to consider is what might happen if two defects are created. Will the disturbance to the lattice be minimized if the defects are brought together, or if the lattice acts to separate them? The astute student of physics will see that this line of thought leads to a model for gravity.
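A crude way to picture a distortion that is largest at the impurity and dies away toward the pinned walls is a scalar relaxation on a square grid. This is a hedged sketch, not a model of real lattice mechanics: it assumes a single number per site stands in for the local distortion, pins the outer ring (the container walls) at zero and the centre site (the impurity) at a fixed value, and lets every other site settle to the average of its four neighbours (the discrete Laplace equation, solved by Gauss-Seidel iteration). The function `impurity_distortion` and its parameters are hypothetical.

```python
def impurity_distortion(size=21, push=1.0, tol=1e-10, max_sweeps=20000):
    """Scalar stand-in for the distortion around one impurity (hypothetical).

    The outer ring of cells (the walls) is pinned at 0, the centre cell (the
    impurity) is pinned at `push`, and every other cell relaxes to the average
    of its four neighbours until the largest update falls below `tol`.
    """
    centre = size // 2
    u = [[0.0] * size for _ in range(size)]
    u[centre][centre] = push
    for _ in range(max_sweeps):
        largest_change = 0.0
        for i in range(1, size - 1):          # skip the pinned wall rows
            for j in range(1, size - 1):      # skip the pinned wall columns
                if i == centre and j == centre:
                    continue                  # the impurity itself stays put
                new = 0.25 * (u[i - 1][j] + u[i + 1][j] + u[i][j - 1] + u[i][j + 1])
                largest_change = max(largest_change, abs(new - u[i][j]))
                u[i][j] = new
        if largest_change < tol:
            break
    return u


if __name__ == "__main__":
    grid = impurity_distortion()
    # Distortion along the row through the impurity, from impurity to wall.
    print([round(x, 3) for x in grid[10][10:]])
```

Printing the row through the impurity shows the distortion falling steadily from the impurity's value to zero at the wall. The question of whether two impurities would attract or repel is not addressed by this sketch.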

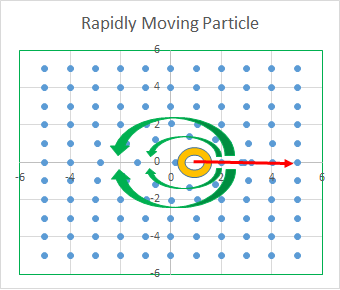

Now let’s propose that somehow our impurity begins to move.

How will the lattice react? Well, again, the atoms at the walls can’t move. The impurity will push against the atom in front of it and leave a gap behind it. So long as the speed of the impurity is much less than the speed of sound in the lattice, only the nearest atoms will be disturbed. The natural way to restore the order of the lattice is for the forward atoms to migrate to the sides as the impurity passes, displacing the atoms already at the sides so that they fill the gap left behind the impurity. When they reach the back, the atoms come to rest by giving their energy back to the impurity. This is the essence of superfluidity: the impurity loses energy to the lattice only temporarily.
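The flow pattern described here, with fluid from the front streaming around the sides and closing in behind, has a classical continuum counterpart: ideal (inviscid, incompressible) potential flow past a circular cylinder. This swaps textbook hydrodynamics in for the author's lattice, so treat it as an analogy only. In the cylinder's frame the surface speed is |2U sin θ|, Bernoulli's law gives the surface pressure, and integrating that pressure around the body gives zero net drag (d'Alembert's paradox). The helper names `surface_speed` and `net_drag` are hypothetical.

```python
import math

# Hypothetical helpers: ideal potential flow of speed U past a cylinder at rest
# (equivalently, a cylinder moving at U through fluid at rest). Classical
# hydrodynamics, used only as an analogy for the lattice picture.

def surface_speed(theta, U):
    """Fluid speed on the cylinder surface, |2 U sin(theta)|.

    theta is measured from the downstream direction: theta = 0 and pi are the
    stagnation points, theta = pi/2 and 3*pi/2 are the sides.
    """
    return abs(2.0 * U * math.sin(theta))


def net_drag(U, radius=1.0, rho=1.0, n=3600):
    """Streamwise force per unit length from integrating the surface pressure."""
    dtheta = 2.0 * math.pi / n
    force_x = 0.0
    for k in range(n):
        theta = (k + 0.5) * dtheta               # midpoint rule around the surface
        v = surface_speed(theta, U)
        gauge_p = 0.5 * rho * (U**2 - v**2)      # Bernoulli: p - p_infinity
        # Outward normal is (cos, sin); pressure pushes inward on the body.
        force_x += -gauge_p * math.cos(theta) * radius * dtheta
    return force_x


if __name__ == "__main__":
    print(net_drag(3.0))                  # zero up to floating-point rounding
    print(surface_speed(math.pi / 2, 1.5))
```

The fore-aft symmetry of the pressure is what cancels the drag: the fluid speeds up along the sides and slows back down behind the body, returning what it borrowed, which parallels the paragraph's description of energy lent and then given back.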

What is interesting to note is that in quantum electrodynamics, the quantum theory of light and charged particles, when calculating collisions between two charged particles, we have to assume that the particles are constantly emitting and re-absorbing photons. This is analogous to the situation in the superfluid: the impurity is constantly losing energy and then regaining it.

Finally, let’s consider an impurity moving closer to the speed of sound in the lattice. In this case, the distortions affect more than the nearest atoms, and the circulation becomes more widespread.

It’s important to note that energy is stored in the circulating motion of the helium atoms. They are moving, just as the impurity is moving, but in the opposite direction, of course. The closer the impurity’s speed is to the speed of sound, the more energy is stored in the circulation. This means that it becomes harder and harder to make the impurity move faster as it approaches the speed of sound.
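The idea that the surrounding flow stores energy, and that this makes the moving object harder to accelerate, has a textbook analogue in the "added mass" of a body in an ideal fluid. The sketch below assumes a circular cylinder of radius a moving at speed U through an ideal, incompressible fluid of density ρ, where the standard potential-flow disturbance speed is U·a²/r². Summing ½ρv² over the fluid gives ½ρπa²U² per unit length, so the cylinder behaves as if its mass per unit length had grown by ρπa². The function names are hypothetical.

```python
import math

# Hypothetical illustration: ideal, incompressible potential flow around a
# cylinder of radius a moving at speed U through fluid that is at rest far away.

def disturbance_speed(r, U, a):
    """Fluid speed at distance r from the cylinder's axis: U * a**2 / r**2."""
    return U * a**2 / r**2


def flow_kinetic_energy(U, a=1.0, rho=1.0, r_max_over_a=1e4, n_r=4000):
    """Kinetic energy per unit length stored in the surrounding fluid.

    Sums 0.5 * rho * v**2 over annuli from r = a out to r = r_max_over_a * a,
    using log-spaced radii r = a * exp(t), so each annulus has area
    2 * pi * r**2 * dt. The speed does not depend on the polar angle, so the
    angular integral simply contributes 2 * pi.
    """
    t_max = math.log(r_max_over_a)
    dt = t_max / n_r
    energy = 0.0
    for i in range(n_r):
        r = a * math.exp((i + 0.5) * dt)   # midpoint of the i-th annulus in t
        v = disturbance_speed(r, U, a)
        energy += 0.5 * rho * v**2 * 2.0 * math.pi * r**2 * dt
    return energy


def added_mass(U, a=1.0, rho=1.0):
    """Extra inertia per unit length implied by the stored energy: 2E / U**2."""
    return 2.0 * flow_kinetic_energy(U, a, rho) / U**2


if __name__ == "__main__":
    print(added_mass(2.0), math.pi)  # numerical result vs. rho * pi * a**2
```

Because the fluid here is incompressible, the added mass comes out the same at every speed U. It illustrates energy being stored in the counterflow; the growth near the speed of sound described above would require a compressible model, which this sketch does not attempt.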

In Special Relativity, Einstein showed that particles become harder and harder to accelerate as they come closer and closer to the speed of light. The relationship is as follows, where $m_0$ is the mass of the particle at rest, $v$ its speed, and $c$ the speed of light:

$$m = \frac{m_0}{\sqrt{1 - v^2/c^2}}$$
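The relativistic relationship is easy to evaluate directly. This small Python sketch (the function name `relativistic_mass` is illustrative) computes m from a rest mass and a speed and shows how steeply it rises as the speed approaches c; it refuses speeds at or above c, where the formula has no real value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact in the SI)


def relativistic_mass(rest_mass, speed, c=SPEED_OF_LIGHT):
    """m = m0 / sqrt(1 - v**2 / c**2), defined only for |v| < c."""
    if abs(speed) >= c:
        raise ValueError("speed must be smaller than the speed of light")
    return rest_mass / math.sqrt(1.0 - (speed / c) ** 2)


if __name__ == "__main__":
    # Ratio m / m0 at increasing fractions of the speed of light.
    for fraction in (0.5, 0.9, 0.99, 0.999):
        m = relativistic_mass(1.0, fraction * SPEED_OF_LIGHT)
        print(f"v = {fraction}c  ->  m/m0 = {m:.3f}")
```

Each step closer to c produces a much larger jump in m than the last, which is the "harder and harder to accelerate" behavior the paragraph compares with the impurity near the speed of sound.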

Again, we see a strong correspondence between superfluidity and the behavior of particles in both special relativity and quantum mechanics. The big difference is this: Richard Feynman famously stated that quantum mechanics was merely a mathematical procedure without any explanation, but when we apply the superfluid analogy to dark energy, at least some previously mysterious quantum and relativistic phenomena seem simple to understand.

For more on models of particle mass, see “That’s the Spirit.”